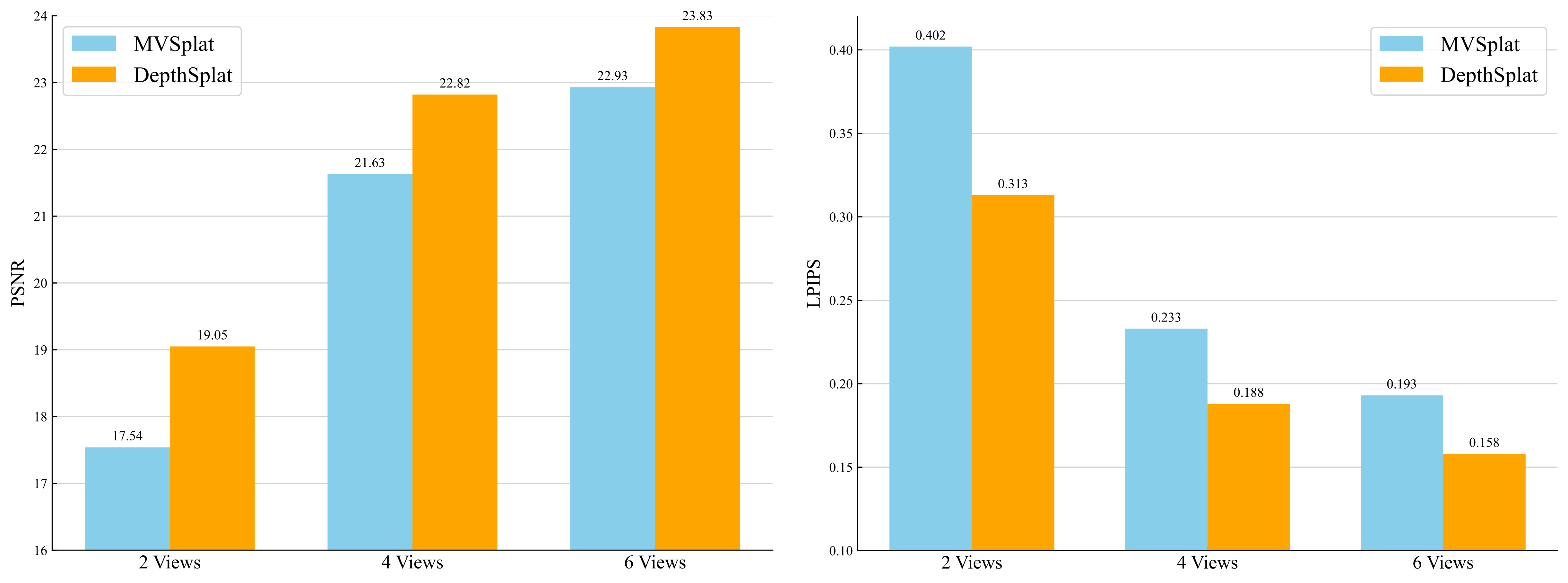

@inproceedings{xu2024depthsplat,
  title = {DepthSplat: Connecting Gaussian Splatting and Depth},
  author = {Xu, Haofei and Peng, Songyou and Wang, Fangjinhua and Blum, Hermann and Barath, Daniel and Geiger, Andreas and Pollefeys, Marc},
  booktitle = {CVPR},
  year = {2025}
}
